Scientists discover artificial intelligence's 'kryptonite', which drives it insane

Large language models and image generators, the best-known forms of generative artificial intelligence, turn out to "go crazy" when they are trained on content they generated themselves. This ouroboros-like self-consumption (the ouroboros is a mythological serpent that bites its own tail) causes the digital brain of a generative model to break down.

This is according to a study by scientists from Rice University and Stanford University, available on the arXiv preprint server. In effect, the scientists have discovered a kind of kryptonite for artificial intelligence (kryptonite is the mineral that strips Superman of his powers and leaves him an ordinary person).

As the researchers explained, significant advances in generative AI algorithms for images, text, and other types of data have tempted developers to use synthetic data to train next-generation AI models. But no one knew exactly how an AI would react if it were fed its own creations.

"Our main conclusion from all scenarios is that without sufficient fresh real-world data in each generation of the autophagous (self-consuming) loop, future generative models are doomed to progressively decrease in quality (accuracy) or diversity," the researchers said in summarizing the study.

They called the condition that results from such training MAD, an abbreviation that deliberately spells the word for "insane". It stands for Model Autophagy Disorder, that is, a disorder caused by a model consuming its own output.

Talk of an AI revolution may therefore have to be put on hold for a while: without fresh real data, or more simply, original human work, AI output is expected to get significantly worse.

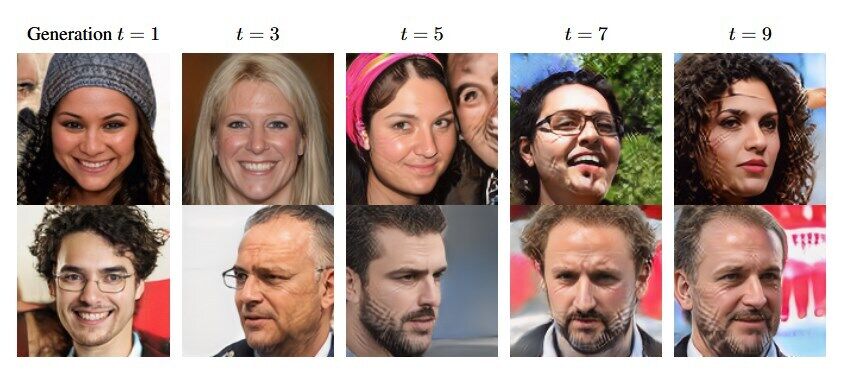

The researchers were able to establish that with repeated training on synthetic content, the AI output becomes more primitive until it becomes monotonous.
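
The effect can be illustrated with a deliberately tiny toy example. The sketch below is not the researchers' experiment: it assumes the "model" is just a one-dimensional Gaussian that is refitted in every generation, and the function name `self_consuming_loop` and its parameters are hypothetical, chosen only for this illustration. Each generation is trained on samples from the previous one, optionally mixed with fresh draws from the real distribution.

```python
import random
import statistics


def self_consuming_loop(generations, sample_size, fresh_fraction=0.0, seed=0):
    """Toy self-consuming loop on a 1-D Gaussian "model" (illustrative assumption).

    Each generation fits a mean and standard deviation to its training set,
    then builds the next training set from its own samples, optionally mixed
    with fresh draws from the real distribution N(0, 1).
    Returns the fitted standard deviation of every generation.
    """
    rng = random.Random(seed)
    n_fresh = round(sample_size * fresh_fraction)
    n_synthetic = sample_size - n_fresh

    # Generation 0 is trained on real data only.
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
    spreads = []
    for _ in range(generations + 1):
        # "Training": estimate the model's parameters from the current data.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        spreads.append(sigma)
        # Next training set: the model's own output plus any fresh real data.
        synthetic = [rng.gauss(mu, sigma) for _ in range(n_synthetic)]
        fresh = [rng.gauss(0.0, 1.0) for _ in range(n_fresh)]
        data = synthetic + fresh
    return spreads


collapsed = self_consuming_loop(generations=500, sample_size=20)
refreshed = self_consuming_loop(generations=500, sample_size=20, fresh_fraction=0.5)
print(f"synthetic data only: {collapsed[0]:.3f} -> {collapsed[-1]:.3g}")
print(f"half fresh data:     {refreshed[0]:.3f} -> {refreshed[-1]:.3g}")
```

In this toy setting the spread of the synthetic-only run shrinks toward zero, because every refit loses a little variance and the estimation errors compound, while the run that keeps receiving real data stays diverse. Real generative models are far more complex, so the numbers here say nothing about how quickly an actual system would degrade.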

The term MAD, coined by the researchers, reflects this process of self-consumption.

As detailed in the paper, the tested AI model went through only five rounds of training on synthetic content before serious problems began to appear.

This could be a real problem for OpenAI, currently one of the major players in the AI market. The company has trained its artificial intelligence on a huge amount of text written by internet users, often allegedly in violation of copyright law.

Since OpenAI already faces lawsuits over the illegal use of content, the company needs material of its own making to keep its AI going. But the easiest option that might have solved the problem, training on content created by the AI itself, now appears to do no good.
